Technical SEO Guide: Complete Outline for Beginners

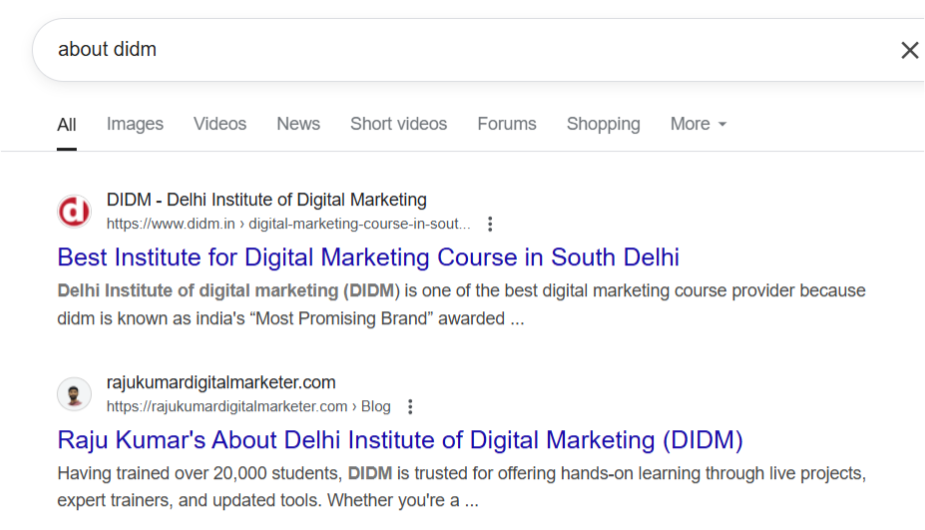

As Raju Kumar, a digital marketer and founder of Digital Marketing Marvel, I’ve worked with dozens of businesses over the past few years, and I can confidently say this: if your website isn’t technically optimized, no amount of good content or backlinks will save your SEO. That’s why I always start every project with a solid Technical SEO Guide checklist — because technical SEO is the foundation of everything we build online.

My Understanding of Technical SEO

In simple terms, technical SEO is the process of optimizing your website’s infrastructure to help search engines like Google crawl, understand, and index your content efficiently. When we take on a new client at Digital Marketing Marvel, the first thing we do is run a technical audit — and more often than not, we find critical crawl issues, broken links, or pages blocked by robots.txt. These things silently kill organic visibility.

This Technical SEO Guide is something I’ve refined from real-world experience. From working with local businesses to nationwide e-commerce stores, I’ve seen the difference technical SEO makes. For example, one of our clients had over 300 pages blocked from crawling by an incorrectly configured robots.txt file. We fixed that within 48 hours, and within two weeks, their impressions and clicks in Google Search Console had nearly doubled.

Improving Crawlability and Indexing: My Approach

According to Ahrefs, more than 90% of pages get no organic search traffic from Google, and I’d bet that technical SEO issues are a major contributor. I’ve had clients with amazing blogs that were practically invisible to Google just because they had never submitted a sitemap.xml file or had duplicate content issues that confused crawlers.

In every Technical SEO Guide I create, crawlability and indexing are non-negotiables. I use tools like Screaming Frog and Google Search Console to identify orphaned pages, duplicate metadata, or missing canonical tags. Just a few simple fixes here can change the game.

Why Speed and Performance Matter in My Experience

Here’s something I always tell my team: a slow website is a dead website. Google data shows that 53% of mobile site visits are abandoned if a page takes longer than 3 seconds to load, and we’ve seen that firsthand. One client in the education space had an average page load time of 6 seconds. We optimized their images, implemented lazy loading, and improved server response times. The result? A 42% drop in bounce rate and a noticeable improvement in keyword rankings within a month.

In this Technical SEO Guide, I make speed, mobile-friendliness, and Core Web Vitals a top priority. It’s not just about pleasing Google — it’s about delivering a great experience to users.

Why I Believe Technical SEO Is Non-Negotiable

If you’re just starting your SEO journey, here’s my advice: don’t skip the technical part. You could be writing the most helpful content in your industry, but without proper crawling, indexing, and performance optimization, it won’t matter.

Through this Technical SEO Guide, I want to help you build a strong SEO foundation. Over the years, I’ve seen businesses triple their traffic just by fixing technical flaws — without publishing a single new blog post or running ads.

Technical SEO is not just about fixing errors — it’s about unlocking the full potential of your content and giving search engines every reason to rank you higher.

Why Technical SEO Matters: Benefits & ROI

When I first started out in digital marketing, I used to think that content and backlinks were everything. But after auditing and optimizing hundreds of websites over the years, I learned that without a strong technical foundation, even the best marketing strategies fall flat. That’s exactly why I created this Technical SEO Guide — to show business owners and marketers how technical SEO directly impacts rankings, revenue, and ROI.

Boosting Visibility and Rankings

One of the biggest benefits I’ve seen from implementing a solid technical SEO strategy is increased visibility. I remember working with a B2B SaaS startup that had amazing blogs, well-targeted keywords, and a good backlink profile. But they were barely getting any traffic. When we ran a technical audit, we found dozens of crawl errors, missing sitemap entries, and multiple pages with duplicate meta titles.

After following the checklist from this Technical SEO Guide — fixing indexing issues, improving internal linking, and optimizing load times — their organic impressions increased by 180% in just two months. And the best part? Their conversions from organic traffic grew by over 50%, all without spending a rupee on ads.

Enhancing Security, Speed, and User Experience

Your website’s technical health isn’t just for search engines — it plays a major role in how users interact with your site too. Google has made it clear that site speed, mobile-friendliness, and HTTPS encryption are ranking signals. And as someone who runs multiple client campaigns across industries, I’ve seen the results firsthand.

One of our e-commerce clients had a site that was not using HTTPS. After switching to SSL, they saw a noticeable improvement in trust and conversion rates. Plus, their rankings for transactional keywords like “buy running shoes online” improved within 30 days. That’s why, in this Technical SEO Guide, we always include HTTPS implementation, mobile responsiveness, and page speed optimization as top priorities.

Google’s Core Web Vitals are now part of its page experience ranking signals. Google is clear that relevance still comes first, but when competing pages are similarly helpful, a better page experience can give yours the edge. That’s why technical SEO is not just a backend concern anymore: it’s user-facing.

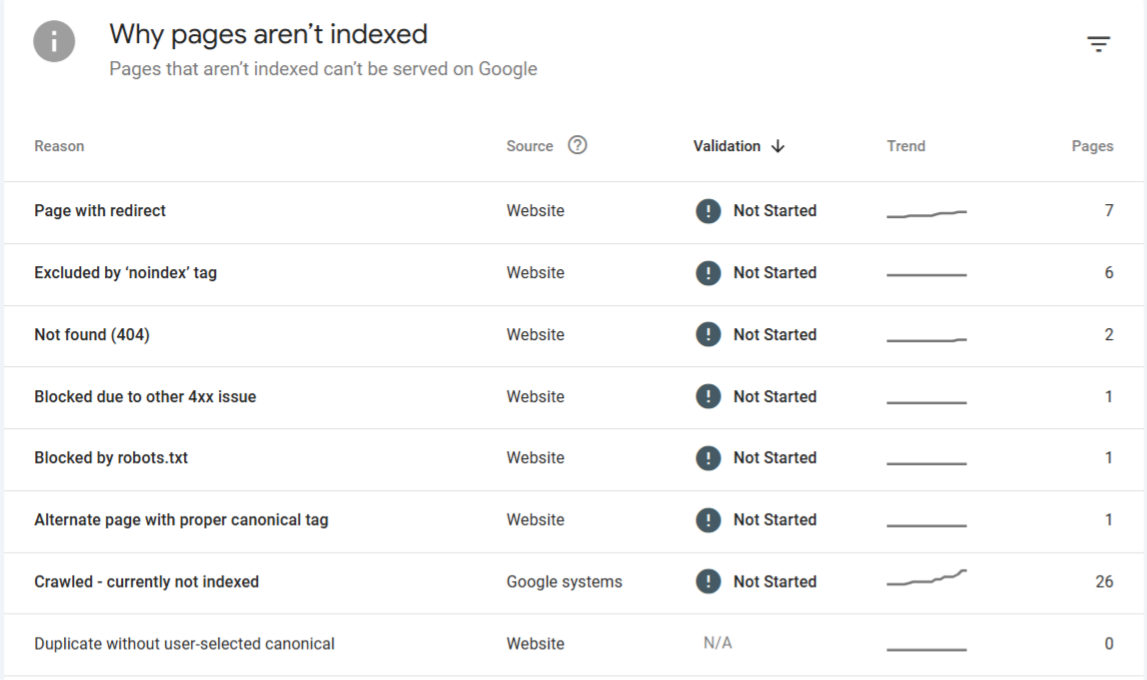

Preventing Indexing and Crawl Issues

If search engines can’t crawl or index your pages properly, your entire content strategy can go to waste. I’ve seen businesses invest lakhs in content creation, only to find out months later that important pages were blocked in the robots.txt file or had conflicting noindex tags.

One case that stands out was a real estate portal we audited last year. They had more than 500 listings, but Google was indexing only 60–70 of them. The issue? A misconfigured canonical tag that pointed every listing page back to the homepage. We fixed it, submitted the sitemap again, and within three weeks, Google indexed 5x more pages, significantly boosting long-tail traffic.

That’s why this Technical SEO Guide focuses so much on crawlability and indexation — they’re the building blocks of search visibility.

From my experience, technical SEO offers one of the highest ROIs in digital marketing. It doesn’t require paid ads, and once implemented correctly, the effects are long-lasting. This Technical SEO Guide is designed to help you capture that value early on, avoid costly mistakes, and build a strong SEO foundation that supports long-term growth.

Technical SEO Guide to Crawlability & Indexing

When I take on a new SEO client, the very first thing I check is whether search engines can crawl and index their website properly. You’d be surprised how often I find critical errors like blocked resources, missing sitemaps, or broken canonical tags. That’s why this section of the Technical SEO Guide is so important: if your pages aren’t crawlable or indexable, your SEO efforts will never deliver real results.

How Search Engines Crawl and Index Websites

Search engines like Google use bots (or crawlers) to discover content across the web. These bots follow links, read code, and try to understand what your site is about. Once they crawl a page, they decide whether or not to index it, that is, to store it in Google’s index so it can appear in search results.

From my own experience, one of our local business clients had dozens of well-written service pages, but only a handful were showing up in search. Why? Because their navigation wasn’t properly linked, and there were no internal links guiding bots to those deeper pages. After restructuring the internal links and updating the sitemap, their indexed pages doubled within a month — all thanks to following the principles laid out in this Technical SEO Guide.

Importance of robots.txt and sitemap.xml

The robots.txt file is like a rulebook you give to search engines — it tells them what parts of your site they’re allowed to crawl. On the other hand, the sitemap.xml file acts as a roadmap, listing the URLs you want bots to discover.

I once audited a health blog that accidentally blocked its entire blog directory in the robots.txt file. As a result, none of their articles were appearing on Google. We corrected the file and resubmitted the sitemap, and within two weeks, they saw a 70% increase in indexed pages.

In every Technical SEO Guide we prepare at Digital Marketing Marvel, configuring and testing these two files is a top priority. You can’t expect Google to rank your pages if you haven’t clearly told it where to look.

Role of Meta Robots Tags and Canonical URLs

Meta robots tags give specific instructions to crawlers — like whether to index a page or follow its links. This is especially useful when dealing with thank-you pages, search filters, or admin pages you don’t want in search results.

Canonical URLs help solve duplicate content issues by pointing search engines to the preferred version of a page. This is critical for e-commerce sites where product pages may exist in multiple categories or filters.

We had a client in the fashion niche with hundreds of duplicate product URLs because of filter-based sorting (like color and size). Google was getting confused, and their ranking pages kept changing. By applying proper canonical tags — which we outlined in our internal Technical SEO Guide — we helped stabilize their rankings and improve keyword consistency.
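Both the meta robots tag and the canonical link live in a page’s `<head>`, so they’re easy to check during an audit. Here’s a minimal Python sketch (standard library only) that reads both from a page’s HTML. The `audit` helper and the sample page are hypothetical, and this sketch only looks at the HTML itself, not at HTTP response headers.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collects meta robots directives and the canonical URL from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.robots = []       # e.g. ["noindex", "follow"]
        self.canonical = None  # href of <link rel="canonical">

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "robots":
            # Directives are comma-separated and case-insensitive.
            self.robots += [d.strip().lower() for d in attrs.get("content", "").split(",") if d.strip()]
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href") or None


def audit(html):
    """Summarize whether a page may be indexed and which URL it declares as canonical."""
    parser = IndexingSignals()
    parser.feed(html)
    return {
        "indexable": not {"noindex", "none"} & set(parser.robots),
        "robots": parser.robots,
        "canonical": parser.canonical,
    }


page = """<html><head>
<meta name="robots" content="noindex, follow">
<link rel="canonical" href="https://www.example.com/thank-you/">
</head><body>Thanks for your enquiry!</body></html>"""

print(audit(page))
```

Running this over every URL from a crawl quickly surfaces pages that are accidentally set to `noindex` or that point their canonical somewhere unexpected.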

In my experience, fixing crawl and index issues is one of the fastest ways to boost a site’s performance. You don’t need to write new content — you just need to make sure Google can properly find and understand what’s already there. And that’s exactly what this Technical SEO Guide helps you achieve.

A strong crawl and index strategy ensures your efforts aren’t wasted. If search engines can’t access or trust your site’s structure, you’re missing out on massive SEO gains — and that’s a risk no smart marketer should take.

Technical SEO Guide to Site Architecture & URL Structure

When I first began optimizing websites as a digital marketer, I underestimated how much site structure affects SEO performance. But after working on more than 100 website audits at Digital Marketing Marvel, I’ve learned that poor structure often leads to poor rankings, even when everything else seems perfect. That’s why in this section of the Technical SEO Guide, I want to walk you through how to set up your site architecture and URL structure for long-term SEO success.

Logical, Flat Site Architecture

A flat site architecture means keeping every important page within a few clicks of the homepage; the common rule of thumb is three clicks or fewer. This improves crawl efficiency and ensures that no page is buried too deep for Google to find.

For example, one of our clients in the education industry had dozens of blog posts that were five or six levels deep from the homepage. Google crawlers weren’t reaching those URLs effectively, and their organic performance suffered. We reorganized the blog under clear categories and added them to the top navigation. Within 30 days, their blog traffic increased by over 60%, purely because we made the structure flatter and easier to crawl.
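Click depth is easy to measure once you have a list of internal links, for example one exported from a crawler. Here’s a minimal Python sketch, assuming a hypothetical link graph: a breadth-first search from the homepage gives each page its shortest click path, flags pages more than three clicks deep, and lists pages that no internal link reaches at all (orphans).

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage over internal links.

    `links` maps each URL to the URLs it links to. Returns {url: clicks from home}.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest click path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph, e.g. exported from a crawl.
site = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/blog/old-post/"],
    "/services/": ["/services/seo/"],
}
# Every known URL, plus one page that exists (say, in the sitemap) but nothing links to.
all_pages = set(site) | {t for targets in site.values() for t in targets} | {"/orphan-landing-page/"}

depths = click_depths(site, "/")
too_deep = sorted(url for url, d in depths.items() if d > 3)
orphans = sorted(all_pages - set(depths))
print(too_deep)  # pages more than three clicks from the homepage
print(orphans)   # pages no internal link reaches
```

Pages in either list are good candidates for category pages, navigation links, or contextual internal links.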

That’s the power of structure — and it’s why this Technical SEO Guide treats site architecture as a fundamental pillar of SEO.

Best Practices for Internal Linking and URL Design

Internal linking is like building bridges across your content. When done right, it helps search engines understand your content hierarchy and improves the flow of link equity across your pages. I always recommend placing internal links in your primary content — not just in the sidebar or footer — and using descriptive anchor text.

Take a real-world example: we worked with a SaaS brand that had excellent product documentation but no internal links from their main feature pages. After adding 30–40 strategic internal links using keyword-rich anchor text, the documentation pages saw a 40% rise in organic traffic within weeks. It’s one of the simplest fixes in the Technical SEO Guide, yet so many people overlook it.

When it comes to URL design, clean and readable URLs are non-negotiable. I always recommend:

- Using lowercase letters

- Including relevant keywords

- Avoiding numbers, special characters, or dynamic parameters

For example, instead of using:

www.example.com/page?id=123

use:

www.example.com/seo-services

Google itself recommends simple, structured URLs, and I’ve seen the difference in CTRs and crawlability firsthand.
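If your CMS lets you set URLs by hand, a small helper can apply these rules consistently. Here’s a minimal Python sketch of a hypothetical `slugify` function that lowercases a title, drops special characters, and joins words with hyphens. It keeps digits, so you’d still decide case by case whether a number belongs in the URL.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Fold accented characters to plain ASCII where possible (é -> e).
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Replace every run of characters that isn't a letter or digit with one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")


print(slugify("SEO Services"))                # seo-services
print(slugify("Café & Bar: Opening Hours!"))  # cafe-bar-opening-hours
```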

Breadcrumb Navigation and Hierarchy

Breadcrumbs are a subtle but powerful navigation tool — both for users and search engines. They show where a user is on your site and help Google understand your content hierarchy.

We implemented breadcrumb navigation for a large e-commerce brand with thousands of product pages. After doing so and marking up breadcrumbs with structured data, we saw enhanced listings in the SERPs (with visible breadcrumb paths) and an improvement in average position for several mid-funnel keywords.
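Breadcrumb markup uses the schema.org `BreadcrumbList` type, with one `ListItem` per level and a `position` starting at 1. Here’s a minimal Python sketch that builds it from a hypothetical category trail; the resulting JSON would go inside a `<script type="application/ld+json">` tag on the page.

```python
import json


def breadcrumb_jsonld(trail):
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, homepage first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical trail for a product category page.
trail = [
    ("Home", "https://www.example.com/"),
    ("Men's Shoes", "https://www.example.com/mens-shoes/"),
    ("Running Shoes", "https://www.example.com/mens-shoes/running/"),
]
print(json.dumps(breadcrumb_jsonld(trail), indent=2))
```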

That’s why in every Technical SEO Guide I create for clients, breadcrumb navigation is listed as a must-have — especially for large websites with multiple categories and subcategories.

A clean, logical structure doesn’t just help with SEO — it improves user experience, lowers bounce rates, and boosts engagement. In my years of working on website architecture, I’ve seen sites go from invisible to dominant just by fixing their structure and internal pathways.

If you follow the principles outlined in this Technical SEO Guide, you’re not just optimizing for Google — you’re making your website easier to use, navigate, and grow.

Technical SEO Guide to Site Speed & Performance

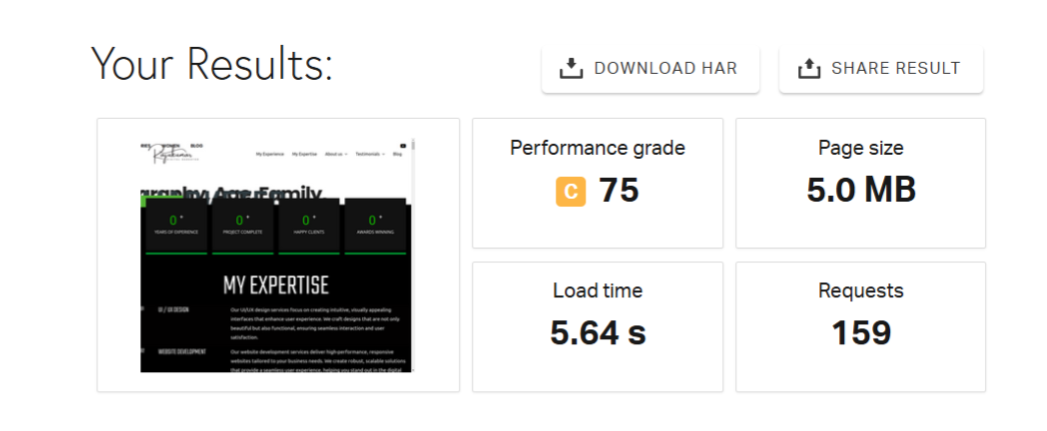

When I first started Digital Marketing Marvel, one of my biggest learning moments was realizing how heavily site speed impacts not just SEO but user retention and conversions. A slow website loses traffic, rankings, and trust — no matter how great the content is. That’s why this section of the Technical SEO Guide focuses entirely on speed and performance optimization.

Importance of Page Speed in SEO

Google has officially confirmed that page speed is a ranking factor on both desktop and mobile. And the impact is real. According to Google’s research, 53% of mobile site visits are abandoned if a page takes longer than 3 seconds to load. Let that sink in. More than half of your potential mobile visitors could leave before they even read your headline.

We had a client in the hospitality space whose website took over 7 seconds to load. After we applied the improvements from this Technical SEO Guide, including reducing server response times and compressing images, we brought the load time down to under 2.5 seconds. The result? A 38% drop in bounce rate and a 30% increase in organic leads in just a month.

Page speed isn’t just about technical bragging rights — it directly affects revenue.

Core Web Vitals (LCP, INP, CLS)

Google introduced Core Web Vitals in 2020 and began using them as ranking signals in 2021 as part of its Page Experience update. These are specific metrics Google uses to evaluate a user’s experience on your site:

- LCP (Largest Contentful Paint): How fast the main content loads. Good: 2.5 seconds or less.

- INP (Interaction to Next Paint): How quickly your site responds to user interactions. Good: 200 ms or less. INP replaced FID (First Input Delay) as a Core Web Vital in March 2024.

- CLS (Cumulative Layout Shift): Measures visual stability, meaning how much the layout shifts unexpectedly while the page loads. Good: a score of 0.1 or less.
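Each metric has three bands (good, needs improvement, poor), and Google assesses them at the 75th percentile of real-user page loads. Here’s a minimal Python sketch of that classification using Google’s published thresholds, with INP as the current responsiveness metric; the sample page values are made up.

```python
# Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, value):
    """Classify a 75th-percentile metric value into Google's three bands."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical 75th-percentile field values for one page.
page = {"LCP": 3.1, "INP": 180, "CLS": 0.02}
ratings = {metric: rate(metric, value) for metric, value in page.items()}
print(ratings)
print("passes Core Web Vitals:", all(r == "good" for r in ratings.values()))
```

In this made-up example, LCP alone keeps the page from passing, which is exactly the kind of case where resizing a hero image pays off.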

One of our e-commerce clients had a poor LCP score due to oversized banner images. We resized the images, set proper dimensions, and deferred offscreen elements. Not only did they pass the Core Web Vitals test, but their ranking for high-volume product keywords jumped from page 2 to page 1, all because we followed the exact steps from our internal Technical SEO Guide.

Techniques: Image Compression, Caching, Lazy Loading

Here’s where technical fixes start making a major impact:

- Image Compression: I always recommend compressing images with tools like TinyPNG and serving them in modern formats like WebP, without visibly losing quality. One client’s homepage dropped from 4 MB to under 1.5 MB just by compressing graphics, and their load time improved by nearly 2 seconds.

- Caching: Browser caching stores website resources locally, reducing load time on repeat visits. Every Technical SEO Guide I create includes configuring caching rules using tools like WP Rocket, Cloudflare, or manual .htaccess directives.

- Lazy Loading: This technique ensures images and videos only load when a user scrolls to them. It’s especially useful for long-form pages or blog posts with multiple media files. We implemented lazy loading on a blog-heavy portal, and it immediately cut load times by 35% — all with minimal development effort.
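Whichever tool you use, browser caching ultimately comes down to the `Cache-Control` response header. Here’s a minimal Python sketch of one possible policy, assuming your build gives static assets fingerprinted filenames (like `app.3f9a1c.css`). The extension list and one-year lifetime are illustrative choices, not the defaults of WP Rocket, Cloudflare, or any other tool.

```python
from pathlib import PurePosixPath

ONE_YEAR = 60 * 60 * 24 * 365  # seconds

# Hypothetical policy: long-lived caching for static assets, revalidation for pages.
LONG_LIVED = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control(path):
    """Return a Cache-Control header value for a requested path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in LONG_LIVED:
        # Only safe if filenames change whenever content changes,
        # because browsers won't re-check these files for a year.
        return f"public, max-age={ONE_YEAR}, immutable"
    # HTML pages: the browser may store a copy but must revalidate before reusing it.
    return "no-cache"


for path in ["/assets/app.3f9a1c.css", "/images/hero.webp", "/blog/technical-seo-guide/"]:
    print(path, "->", cache_control(path))
```

Repeat visitors then load CSS, scripts, and images straight from their browser cache, while HTML stays fresh.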

If you want to win at SEO in today’s competitive space, site speed is non-negotiable. From improving user experience to passing Core Web Vitals and securing better rankings, the benefits are massive. I always say: a fast site feels modern, trustworthy, and user-first — and that’s exactly what Google rewards.

This Technical SEO Guide is designed to give you the exact steps to improve performance without wasting time or budget. And from my personal experience, I can tell you — speed optimization is one of the few changes that deliver immediate results, both in rankings and in revenue.

Technical SEO Guide to Mobile Optimization & HTTPS

One thing I’ve learned over the years as a digital marketer is this: if your website doesn’t perform well on mobile, it won’t perform at all. And if it’s not secure, users won’t trust it — and neither will Google. That’s why this part of the Technical SEO Guide focuses on two of the most important technical pillars for SEO success today: mobile optimization and HTTPS.

Mobile-First Indexing Explained

Google began rolling out mobile-first indexing in 2018 and made it the default for all new websites in July 2019. That means Google primarily uses the mobile version of your site for indexing and ranking, not the desktop version. I’ve seen this affect dozens of businesses who were still optimizing only for desktop and wondering why their rankings dropped.

One of our clients, a local service provider, had a desktop-optimized site that looked terrible on mobile — tiny text, broken layouts, and overlapping content. We redesigned their entire site using a responsive framework and ensured it passed Google’s Mobile-Friendly Test. Within 30 days, they saw a 25% boost in mobile traffic and significantly better engagement.

That’s why this Technical SEO Guide treats mobile-first indexing as a core requirement — not an optional feature.

Mobile Usability and Responsive Design

Responsive design isn’t just a buzzword — it’s critical for both UX and SEO. When I work with any business, especially e-commerce or service-based sites, I always test for:

- Tap targets and spacing

- Font size and readability

- Viewport configuration

- Layout adaptability on different screen sizes
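Viewport configuration is the easiest item on that list to check automatically. For responsive pages, the usual tag is `<meta name="viewport" content="width=device-width, initial-scale=1">`. Here’s a minimal Python sketch that parses the tag’s `content` value and flags a few common problems; the checks are a simplified, illustrative checklist, not Google’s own test.

```python
def parse_viewport(content):
    """Parse a viewport meta tag's content attribute into a dict of lowercase settings."""
    settings = {}
    for part in content.replace(";", ",").split(","):
        if "=" in part:
            key, value = part.split("=", 1)
            settings[key.strip().lower()] = value.strip().lower()
    return settings


def viewport_issues(content):
    """Flag common viewport problems (a simplified, illustrative checklist)."""
    s = parse_viewport(content)
    issues = []
    if s.get("width") != "device-width":
        issues.append("width should be device-width")
    if s.get("initial-scale") not in ("1", "1.0"):
        issues.append("initial-scale should be 1")
    if s.get("user-scalable") in ("no", "0"):
        issues.append("zooming is disabled, which hurts readability")
    return issues


print(viewport_issues("width=device-width, initial-scale=1"))  # []
print(viewport_issues("width=1024, user-scalable=no"))
```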

At Digital Marketing Marvel, we once helped an educational institute whose mobile bounce rate was nearly 80%. After redesigning their site with a clean, responsive UI and optimizing for load speed on mobile, the bounce rate dropped by 40%, and their mobile conversions tripled.

In this Technical SEO Guide, I always stress that a responsive website not only pleases users — it makes Google’s job easier, leading to better indexing and higher rankings.

HTTPS and SSL Certificates as Trust and Ranking Factors

Security is now a baseline expectation. Google confirmed HTTPS as a (lightweight) ranking signal back in 2014, and Chrome marks pages served over plain HTTP as “Not Secure.” From an SEO and user trust perspective, this is huge.

We once onboarded a client in the health sector who was still using HTTP. Users were hesitant to submit inquiry forms, and their bounce rate was high. After installing an SSL certificate and moving them to HTTPS, we noticed two things almost immediately:

- Their form conversion rate increased by 35%

- Their average keyword ranking improved within 3 weeks

This wasn’t magic — it was a direct result of implementing what we outline in every Technical SEO Guide: securing your site with HTTPS is no longer optional. It builds trust, ensures data privacy, and aligns your site with modern SEO standards.

In today’s digital landscape, mobile optimization and security are at the heart of technical SEO. If your site isn’t fast, functional, and safe on mobile, you’re going to lose both users and rankings.

My advice? Don’t wait for penalties or lost traffic to make these changes. Follow the mobile and HTTPS best practices in this Technical SEO Guide, and you’ll not only protect your site — you’ll future-proof your entire SEO strategy.

Technical SEO Guide to Structured Data & Schema Markup

When I first discovered structured data, I didn’t realize how powerful it could be. But after seeing how schema markup can transform how your content appears in Google — with star ratings, FAQs, event details, and more — I started implementing it in almost every SEO project at Digital Marketing Marvel. And the results have been nothing short of impressive. This section of the Technical SEO Guide covers what structured data is, why it matters, and how to apply it effectively.

What Is Structured Data and Why It Matters

Structured data is a standardized format that helps search engines better understand your content. It uses schema.org vocabulary, which allows you to describe elements on your page — like product details, reviews, FAQs, recipes, and even job postings — in a language Google and other search engines can understand.

In one project, we added structured data to an article listing the “Top 10 Digital Marketing Tools.” Within weeks, that blog post started showing FAQ rich snippets in search results, and the click-through rate (CTR) jumped by 28% without changing a single word of the content. That’s why structured data is a key component of this Technical SEO Guide. It’s not just about being found; it’s about standing out.

Common Types of Schema Markup (And When I Use Them)

There are dozens of schema types, but here are a few I’ve used most effectively in real campaigns:

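- FAQ Schema: I always recommend this for blogs, service pages, or knowledge-base content. When implemented correctly, it can trigger FAQ rich snippets and expand your listing on Google. Note, though, that since August 2023 Google shows FAQ rich results only for well-known, authoritative government and health websites, so most sites should no longer expect the expanded listing.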

- Product Schema: For e-commerce sites, product schema displays prices, availability, and ratings — all directly in the search results. We added this for a client selling fitness equipment, and within a month, product listings began appearing with star ratings and pricing, which increased conversion rates significantly.

- Article Schema: I use this for blogs and news-style content. According to Google’s documentation, it helps Google understand more about the page and show better title text, images, and date information for the article in search results.

We’ve also implemented Breadcrumb, Event, and Local Business schemas for clients in hospitality, events, and local service niches — all outlined in our in-house Technical SEO Guide.
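For the product example above, the markup is a schema.org `Product` with a nested `Offer` (price, currency, availability) and, if the page shows genuine customer reviews, an `AggregateRating`. Here’s a minimal Python sketch; the product name, price, rating, and URL are hypothetical placeholders, and the values you mark up should always match what’s visible on the page.

```python
import json


def product_jsonld(name, url, price, currency, in_stock, rating, review_count):
    """Build schema.org Product JSON-LD with an Offer and an AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "INR"
            "availability": "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


data = product_jsonld(
    "Adjustable Dumbbell Set",
    "https://www.example.com/products/adjustable-dumbbell-set/",
    12999, "INR", True, 4.6, 212,
)
print(json.dumps(data, indent=2))
```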

Tools and Methods to Implement Schema

There are multiple ways to implement schema markup depending on your platform and skill level:

- Manual JSON-LD Code: This is Google’s recommended format. If you’re comfortable with code, you can insert JSON-LD scripts directly into your page HTML. I often do this for custom-built websites.

- Plugins (for WordPress): I use tools like Rank Math or Schema Pro to automate schema generation. These are ideal for beginners who want results without coding.

- Google’s Rich Results Test: Once schema is added, I always run this test to check if Google can read it correctly and whether the page is eligible for rich results.
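If you add JSON-LD by hand, the markup is just a JSON object inside a `<script type="application/ld+json">` tag in your page HTML. Here’s a minimal Python sketch of a hypothetical helper that produces that tag; the article data is illustrative. It escapes `</` so that text such as `</script>` inside a value can’t close the tag early. After publishing, you’d still confirm the result with the Rich Results Test.

```python
import json


def jsonld_script(data):
    """Serialize a schema.org object into a <script type="application/ld+json"> tag."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # "<\/" is a valid JSON escape for "</", so the data is unchanged when parsed,
    # but a literal "</script>" can no longer end the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Technical SEO Guide: Complete Outline for Beginners",
    "author": {"@type": "Person", "name": "Raju Kumar"},
}
print(jsonld_script(article))
```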

In every Technical SEO Guide we deliver to clients, we include a schema strategy — customized by page type, content goals, and audience. And time and time again, I’ve seen rich results lead to higher CTRs, better rankings, and deeper user engagement.

If you’re still not using structured data, you’re missing a huge opportunity to differentiate your website in search results. This Technical SEO Guide emphasizes that schema markup is one of the most underused yet impactful strategies in SEO today. It helps Google understand your content better and rewards you with more visibility — often with very little effort.

Add schema. Test it. Monitor results. Trust me — the boost is worth it.

Technical SEO Guide to Redirects & Error Handling

Redirects and error handling might seem like boring backend tasks, but let me tell you — they can make or break your SEO performance. Over the years at Digital Marketing Marvel, I’ve seen websites lose massive traffic simply because of poor redirection practices or broken internal links. That’s why this part of the Technical SEO Guide focuses on setting up redirects the right way and fixing common crawl errors before they damage your rankings.

Understanding 301 and 302 Redirects

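Let’s start with the basics. A 301 redirect is permanent: it tells search engines that a page has moved for good and is a strong signal that the new URL should be treated as the canonical one. Google has said that 3xx redirects no longer lose PageRank, so the new URL inherits the old one’s link equity. A 302 redirect is temporary, so Google may keep the old URL indexed and ranking instead of the new one, which is why 302s are the wrong choice for permanent moves.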

I remember working with an online coaching platform that had redesigned their website and used 302 redirects everywhere. As a result, their rankings dropped, and Google wasn’t passing authority from their old high-ranking URLs to the new pages. We switched all appropriate redirects to 301s, and within a few weeks, we recovered nearly 70% of their lost keyword positions. That’s how important proper redirection is — and why it’s a key chapter in this Technical SEO Guide.
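At the HTTP level, a redirect is just a status code plus a `Location` header, which is why 301 versus 302 is so easy to get wrong during a redesign. Here’s a minimal Python (WSGI) sketch, assuming a hypothetical map of moved URLs, that answers each permanently moved path with a 301. On most sites you’d configure the same rules in your server, CDN, or CMS rather than in application code.

```python
# Hypothetical map of permanently moved URLs: old path -> new absolute URL.
MOVED = {
    "/courses/seo-basics.html": "https://www.example.com/courses/seo-basics/",
    "/old-pricing/": "https://www.example.com/pricing/",
}


def app(environ, start_response):
    """Minimal WSGI app: 301 for permanently moved paths, 404 for anything else."""
    path = environ.get("PATH_INFO", "/")
    if path in MOVED:
        # 301 = permanent move. A 302 would signal that the old URL is coming back.
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found"]


def fetch(path):
    """Call the app directly (no server needed) and return (status, Location)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    app({"PATH_INFO": path}, start_response)
    return captured["status"], captured["headers"].get("Location")


print(fetch("/old-pricing/"))  # ('301 Moved Permanently', 'https://www.example.com/pricing/')
print(fetch("/missing/"))      # ('404 Not Found', None)
```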

Fixing 404 Errors and Broken Links

Nothing annoys users — or Google — more than clicking a link and landing on a 404 error page. These errors hurt crawl efficiency, damage user experience, and can dilute the authority of your internal linking structure.

In every Technical SEO Guide I create, one of the first audit steps is checking for 404 errors using tools like Screaming Frog, Ahrefs, or Google Search Console. We recently worked on a fashion brand’s site with over 1,200 404 errors due to deleted seasonal product pages. We fixed this by:

- Redirecting relevant old URLs to new or related pages

- Removing outdated internal links

- Creating a custom 404 page with navigation links and a search bar
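Once a crawler has exported which pages link where and what status code each URL returned, listing the internal links that point at 404 pages is a simple join. Here’s a minimal Python sketch with hypothetical crawl data; each pair tells you which page’s link to update or which old URL deserves a redirect.

```python
def broken_links(outlinks, status):
    """Return (source page, broken target) pairs for internal links that hit a 404.

    outlinks: {page: [linked URLs]} from a crawl; status: {URL: HTTP status code}.
    """
    return sorted(
        (source, target)
        for source, targets in outlinks.items()
        for target in targets
        if status.get(target) == 404
    )


# Hypothetical crawl results for a small fashion store.
outlinks = {
    "/": ["/sale/", "/summer-2023/"],
    "/blog/beach-looks/": ["/summer-2023/linen-shirt/", "/shirts/"],
}
status = {"/sale/": 200, "/summer-2023/": 404, "/summer-2023/linen-shirt/": 404, "/shirts/": 200}

for source, target in broken_links(outlinks, status):
    print(f"{source} links to missing page {target}")
```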

The result? A 20% increase in pages indexed by Google and a noticeable drop in bounce rate — all by simply cleaning up what most people ignore.

Redirect Chains and Loops: The Hidden Killers

Redirect chains occur when one URL redirects to another, which then redirects to yet another — creating a multi-step chain. This slows down crawling and may even prevent some bots from reaching the final destination. Redirect loops are worse — they send bots (and users) in endless circles.

We once audited a tech blog where their HTTPS migration had created over 500 redirect chains due to improper implementation. Some pages went through 4–5 redirects before loading. After simplifying and cleaning those up, we saw a clear improvement in crawl speed and a 15% boost in organic traffic in just two weeks.

That’s why in this Technical SEO Guide, I always emphasize: use a single 301 redirect when necessary, and avoid chaining at all costs.
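To remove chains, point every old URL straight at its final destination instead of at the next hop. Here’s a minimal Python sketch, assuming you’ve exported your redirect rules as a hypothetical source-to-target map. It follows each chain to the end and raises an error if it finds a loop.

```python
def flatten_redirects(redirects):
    """Map every redirecting URL straight to its final destination.

    redirects: {source: target}. Raises ValueError on a redirect loop.
    """
    final = {}
    for start in redirects:
        seen = [start]
        url = redirects[start]
        while url in redirects:  # the target redirects again: keep following hops
            if url in seen:
                raise ValueError("redirect loop: " + " -> ".join(seen + [url]))
            seen.append(url)
            url = redirects[url]
        final[start] = url
    return final


# Hypothetical chain left over from an HTTP -> HTTPS migration plus a slug change.
chain = {
    "http://example.com/blog/post": "https://example.com/blog/post",
    "https://example.com/blog/post": "https://www.example.com/blog/post",
    "https://www.example.com/blog/post": "https://www.example.com/blog/new-post/",
}
for source, target in flatten_redirects(chain).items():
    print(source, "->", target)
```

Each old URL then needs exactly one 301 hop, which is what the rule above asks for.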

Redirects and error handling may not be glamorous, but they’re absolutely essential to maintaining a healthy SEO foundation. Small mistakes like using the wrong redirect type, ignoring 404s, or stacking chains can lead to big problems.

With this Technical SEO Guide, my goal is to help you spot and fix these issues early — before they hurt your rankings, confuse crawlers, or push users away. If you clean up your redirects and fix your errors, you’ll not only improve SEO performance — you’ll create a smoother, faster, and more trustworthy user experience.

Technical SEO Guide to XML Sitemaps & Robots.txt

If search engines are like visitors coming to explore your website, then the XML sitemap is the map you hand them, and the robots.txt file is the list of do’s and don’ts. Over the years, I’ve realized that many websites lose traffic simply because Google can’t properly understand which pages to crawl — or worse, they accidentally block important sections. That’s why this section of the Technical SEO Guide dives into the crucial role of sitemaps and robots.txt in controlling and optimizing crawl behavior.

Purpose of an XML Sitemap

An XML sitemap is essentially a list of all the URLs you want search engines to discover and index. It helps search bots find pages faster — especially new content, deep pages, or URLs that don’t have many internal links.
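Most CMSs and SEO plugins generate the sitemap for you, but the format itself is simple: a `<urlset>` in the sitemaps.org namespace with one `<url>` entry per page, each holding an absolute `<loc>` and an optional `<lastmod>`. Here’s a minimal Python sketch using only the standard library; the URLs and dates are placeholders, and ElementTree escapes characters like `&` for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap.xml bytes for a list of (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2025-01-15
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


pages = [
    ("https://www.example.com/", "2025-01-15"),
    ("https://www.example.com/services/business-law/", "2024-11-02"),
    ("https://www.example.com/blog/how-to-register-a-company/", "2024-12-20"),
]
xml_bytes = build_sitemap(pages)
print(xml_bytes.decode("utf-8"))
```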

One of our clients, a law firm, had published 100+ service and blog pages over the years, but their visibility was poor. When we audited the site, we found that many of those URLs weren’t even included in the sitemap, and Google had never crawled them. We updated the sitemap, submitted it in Search Console, and within a month, nearly 40% more pages were indexed, leading to a steady increase in impressions and traffic.

That’s why, in every Technical SEO Guide we build, the sitemap is a critical first step to improving discoverability.

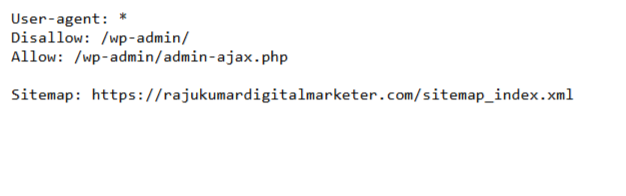

Robots.txt Configuration Basics

The robots.txt file is a text file placed at the root of your website to tell search engine bots which pages or folders they may crawl and which to skip. But here’s the catch: a small mistake here can stop search engines from crawling your entire site.

I once audited a fitness blog where the robots.txt file had this line:

Disallow: /

This single line told bots not to crawl anything on the site. As a result, none of their new content was being indexed for months. We fixed it immediately, resubmitted the sitemap, and started seeing crawl activity spike within 48 hours.

Your Technical SEO Guide should always include:

- Allowing essential pages like product, blog, and service URLs

- Blocking admin pages, cart or checkout flows, and duplicate paths

- Adding the sitemap URL at the bottom of the robots.txt file:

Sitemap: https://www.example.com/sitemap.xml
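Before you deploy a robots.txt change, it’s worth checking a handful of important URLs against it. Python’s standard library includes a robots.txt parser, so a minimal sketch looks like this; the rules and URLs are hypothetical. One caveat: `urllib.robotparser` applies rules in file order, while Google picks the most specific matching rule, so results can differ for files that mix `Allow` and `Disallow` on overlapping paths.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap.xml
"""


def load(text):
    """Parse robots.txt content without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load(ROBOTS_TXT)
for path in ["/blog/technical-seo-guide/", "/services/", "/cart/", "/wp-admin/options.php"]:
    allowed = rules.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path:30} {'crawlable' if allowed else 'blocked'}")
print(rules.site_maps())

# The one-line mistake from the fitness blog: every URL is blocked.
broken = load("User-agent: *\nDisallow: /\n")
print(broken.can_fetch("Googlebot", "https://www.example.com/blog/"))  # False
```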

How to Use Both for Better Crawl Control

Here’s how I use XML sitemaps and robots.txt together for maximum effect — and how you can too, by following this Technical SEO Guide:

- Use the robots.txt file to block irrelevant or sensitive content (like /wp-admin/, search results pages, or filters).

- Use the XML sitemap to highlight only high-value, crawl-worthy pages.

- Keep both files updated whenever you launch new pages or sections.

- Regularly test using Google Search Console’s URL Inspection Tool and its robots.txt report (which replaced the older robots.txt Tester).
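One quick consistency check follows from this approach: no URL in your sitemap should be blocked by robots.txt, since that sends crawlers two contradictory signals. Here’s a minimal Python sketch, assuming you already have the sitemap’s URLs as a list; the city-page URLs and rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(robots_txt, sitemap_urls, agent="Googlebot"):
    """List sitemap URLs that robots.txt blocks (a contradictory signal to crawlers)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls if not parser.can_fetch(agent, url)]


robots_txt = "User-agent: *\nDisallow: /search/\nDisallow: /locations/draft/\n"
sitemap_urls = [
    "https://www.example.com/locations/pune/seo-services/",
    "https://www.example.com/locations/draft/nagpur/",
    "https://www.example.com/search/?q=seo",
]
print(blocked_sitemap_urls(robots_txt, sitemap_urls))
```

Anything this prints should either be removed from the sitemap or unblocked in robots.txt, depending on whether you want it in search.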

When we followed this approach for a multi-location client, Googlebot began prioritizing the right service and city-specific pages. The client saw a 34% rise in local keyword rankings within two months — all without creating any new content. It was pure technical cleanup.

Sitemaps and robots.txt may seem simple, but they’re critical to how Google understands and navigates your site. Misconfigurations can block visibility, while proper setup can significantly boost indexation and ranking.

That’s why I never skip this section in my Technical SEO Guide. When done right, these two files give you control, clarity, and consistency in how your website performs in search engines. And in my experience, that control is what separates a struggling site from one that ranks like a pro.

Technical SEO Guide to Advanced Concepts

Once you’ve nailed the basics of technical SEO, it’s time to go deeper. Over the years at Digital Marketing Marvel, I’ve worked on complex SEO projects involving international websites, dynamic platforms, and massive content duplication challenges. That’s where these advanced concepts come in — and why this part of the Technical SEO Guide is focused on hreflang tags, canonicalization, and JavaScript SEO.

Hreflang Tags for Multilingual SEO

If your website targets users in different languages or regions, hreflang tags are a must. They help Google understand which version of your content to show users based on their language and location. Without them, you risk content duplication or showing the wrong version of a page to the wrong audience.

I worked with a travel company that had versions of their site in English, French, and Spanish, but none of them had hreflang implemented. Google was confused, and rankings were inconsistent across regions. We added the appropriate hreflang attributes for each language-region combination (like hreflang="fr-fr" for French speakers in France), and the result was a 20% increase in organic traffic from their international audience within six weeks.

That’s why any complete Technical SEO Guide for global websites must include a section on hreflang implementation.
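As a rough illustration of what these tags look like, the sketch below generates the set of link elements for one page cluster. The locale codes (en-us, fr-fr, es-es), the URL structure, and the x-default fallback page are assumptions for a site like the travel example above. Every language version would carry the same full set of tags, including a link to itself, because Google expects hreflang annotations to be reciprocal.

```python
from html import escape
from typing import Dict, Optional


def hreflang_links(alternates: Dict[str, str], x_default: Optional[str] = None) -> str:
    """Build the <link rel="alternate" hreflang="..."> tags for one page cluster.

    Every language version should carry this same full set (including a link to
    itself), because hreflang annotations must be reciprocal.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    if x_default:
        # x-default names the fallback page for users who match none of the listed locales.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


# Hypothetical URL structure for English, French, and Spanish versions of one page.
pages = {
    "en-us": "https://www.example.com/en-us/tours/",
    "fr-fr": "https://www.example.com/fr-fr/tours/",
    "es-es": "https://www.example.com/es-es/tours/",
}
print(hreflang_links(pages, x_default="https://www.example.com/tours/"))
```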

Canonicalization to Fix Duplicate Content

Duplicate content is one of the most common issues I encounter during technical audits. Sometimes it’s due to URL parameters (like filter or sort options), and sometimes it’s because the same content appears under multiple categories. That’s where canonical tags come into play.

A canonical tag tells Google which version of a page is the “master” or preferred version. Without it, you risk splitting your ranking signals across multiple duplicate pages.

One of our clients, an e-commerce brand, had thousands of product URLs with duplicate descriptions due to color and size variants. We applied canonical tags pointing to the primary product page, and within a month, we saw more consistent rankings and a 32% improvement in organic impressions.
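The variant problem in that example can be sketched as a small URL-normalization function: strip the parameters that only change color, size, sort order, or filtering, then emit the canonical link element. The parameter names in NON_CANONICAL_PARAMS are hypothetical, since every platform names them differently, and some parameters (such as pagination) usually belong in the canonical URL and are kept here.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only pick a variant, sort, or filter the same content.
NON_CANONICAL_PARAMS = {"color", "size", "sort", "filter"}


def canonical_url(url: str) -> str:
    """Drop variant/sort/filter parameters and any #fragment to get the preferred URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NON_CANONICAL_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Return the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_tag("https://www.example.com/products/trail-shoe?color=red&size=9"))
# <link rel="canonical" href="https://www.example.com/products/trail-shoe" />
print(canonical_url("https://www.example.com/products/?page=2&sort=price"))
# https://www.example.com/products/?page=2
```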

Every Technical SEO Guide I write includes canonicalization strategies so that search engines consolidate ranking signals on the preferred URL instead of splitting them across duplicates.

JavaScript SEO and Dynamic Content Rendering

This is where things get even more technical. Many modern websites — especially SaaS platforms and apps — rely heavily on JavaScript frameworks like React, Angular, or Vue.js. While these frameworks can create fast and engaging user experiences, they can also create SEO problems if Google can’t crawl or render the content properly.

I once worked with a startup whose homepage content was entirely loaded via JavaScript. When we tested it in Google Search Console’s URL Inspection Tool, it was clear: Googlebot couldn’t see any of the main content. Their site looked great to users but was practically invisible to search engines.

We solved it by implementing server-side rendering (SSR) and ensuring all important content was rendered in the initial HTML response. Within 3–4 weeks, critical pages were indexed and started ranking.

That’s why this Technical SEO Guide covers JavaScript SEO — including best practices like:

- Progressive enhancement

- Dynamic rendering (only as a stopgap; Google now describes it as a workaround, not a long-term solution)

- Using <noscript> tags for fallback content

- Pre-rendering important pages

If your site relies on dynamic content, you absolutely must test how search engines are seeing it — or risk being left out of the rankings altogether.
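A quick way to spot the problem in the startup example is to check whether your key content is present in the raw HTML the server returns, before any JavaScript runs. The sketch below does that with Python’s built-in html.parser. The page snippets and phrases are made up, and in practice you would fetch the HTML first (for example with urllib.request) rather than hard-code it. Googlebot does render JavaScript, so this is only a rough pre-check; the URL Inspection Tool’s rendered view remains the authoritative test.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect text from raw HTML, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_initial_html(html: str, key_phrases: list) -> list:
    """Return the key phrases that are absent from the HTML before JavaScript runs."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Collapse whitespace and lowercase so the match is forgiving about formatting.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in key_phrases if p.lower() not in text]


# A client-side-rendered shell: the content only exists after app.js runs.
csr_shell = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'
# The same page server-side rendered: the content is in the initial response.
ssr_page = (
    '<html><body><div id="root"><h1>Project Management for Small Teams</h1>'
    "<p>Plan sprints and track tasks.</p></div>"
    '<script src="/app.js"></script></body></html>'
)

phrases = ["Project Management for Small Teams", "track tasks"]
print(missing_from_initial_html(csr_shell, phrases))  # both phrases are missing
print(missing_from_initial_html(ssr_page, phrases))   # []
```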

Technical SEO is no longer just about fixing 404s or improving page speed. For serious growth, you need to go beyond the basics. Hreflang, canonicalization, and JavaScript rendering are advanced techniques that I’ve used to help clients scale globally, clean up messy architecture, and make modern frameworks search-friendly.

This Technical SEO Guide isn’t just about theory — it’s built from real projects, real challenges, and real wins. And if you implement these advanced concepts correctly, you’ll be way ahead of most competitors in your space.

Technical SEO Guide to Monitoring & Tools

If there’s one thing I’ve learned in my years of doing SEO, it’s this: Technical SEO is never a one-time fix. Even after optimizing everything — from redirects to sitemaps — your site can break, change, or be affected by a new update. That’s why this part of the Technical SEO Guide is dedicated to ongoing monitoring and the essential tools I use to keep every project running smoothly.

Overview of Key SEO Tools

There are plenty of tools out there, but here are the ones I personally use every single week:

- Google Search Console (GSC): The most important free tool. It helps me monitor crawl errors, index coverage, Core Web Vitals, and even mobile usability. I always link every project to GSC from day one — no exceptions.

- Screaming Frog SEO Spider: This is my go-to crawler for running deep technical audits. It mimics how search engines crawl your website and flags issues like 404 errors, duplicate content, missing meta tags, and more. For every Technical SEO Guide I create, Screaming Frog is part of my baseline audit checklist.

- Ahrefs & Semrush: These tools go beyond backlink tracking. I use them to find broken links, monitor technical health, track keyword cannibalization, and get alerts on SERP drops.

- PageSpeed Insights & GTmetrix: For performance testing and Core Web Vitals monitoring. They’re great for identifying LCP, INP, and CLS issues (INP replaced FID as a Core Web Vital in March 2024).

- Google Tag Manager + GA4: For deeper user behavior tracking and debugging site scripts without touching code every time.

I always tell clients: You don’t need 20 tools. You need 4–5 good ones and a strategy to act on what the data tells you. That’s exactly what this Technical SEO Guide is designed to give you.

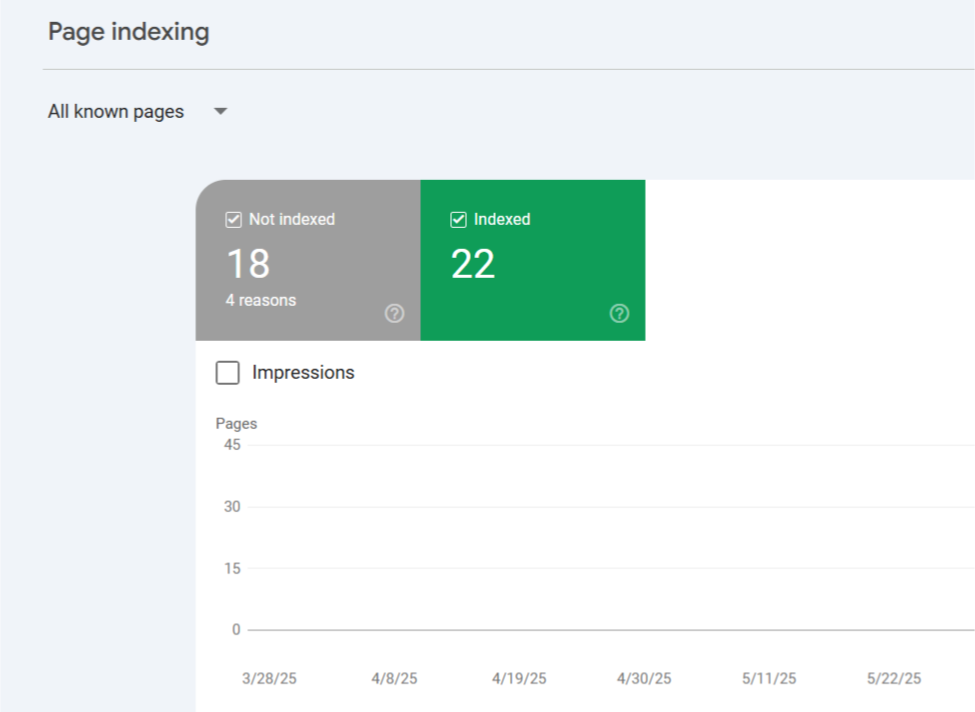

Tracking Crawl Stats, Indexing Status & Performance

Once you’ve set up your site correctly, the next job is to track how Google is interacting with it. In Google Search Console, I routinely check:

- Crawl stats: To monitor how often Googlebot visits the site and spot spikes in crawl errors.

- Indexing status: To ensure new pages are getting indexed and old pages aren’t deindexed accidentally.

- Performance reports: To track impressions, clicks, and CTR across different devices and countries.

For one client, we noticed a sudden drop in indexed pages on GSC — it turned out that a developer had added a noindex tag to their blog templates by mistake. We caught it within a day, fixed it, and avoided a massive traffic loss.
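A check like the one below could catch that kind of accidental noindex early. It is a minimal sketch, assuming you already have a page’s HTML and the value of its X-Robots-Tag response header; it looks for noindex in either place using Python’s html.parser. The sample templates are hypothetical, and running it against one URL per template after each deploy is only a suggested way to use it.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += [d.strip().lower() for d in attr.get("content", "").split(",")]


def is_noindexed(html: str, x_robots_tag: str = "") -> bool:
    """True if noindex appears in a robots meta tag or in the X-Robots-Tag header value."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # Header values may carry a user-agent prefix such as "googlebot: noindex".
    header = [d.split(":", 1)[-1].strip().lower() for d in x_robots_tag.split(",")]
    return "noindex" in parser.directives or "noindex" in header


# Hypothetical blog template after the bad deploy, and a healthy one.
broken_template = '<head><meta name="robots" content="noindex, follow"></head>'
healthy_template = "<head><title>Blog post</title></head>"

print(is_noindexed(broken_template))              # True
print(is_noindexed(healthy_template))             # False
print(is_noindexed(healthy_template, "noindex"))  # True (set via the HTTP header instead)
```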

That’s why continuous monitoring is more than just good practice — it’s mission-critical. This Technical SEO Guide isn’t just about fixing problems, but about detecting them before they become disasters.

Setting Up Alerts for Technical Issues

I always recommend automating alerts so that you’re not manually checking tools every day. Here’s what we set up for clients:

- GSC email alerts for indexing issues, security warnings, or spikes in errors.

- Screaming Frog crawl schedule (weekly or biweekly) with exports sent directly to our email.

- StatusCake or UptimeRobot for website downtime monitoring.

- Ahrefs/Semrush site audit alerts for sudden changes in technical health or backlink profile.

These automated alerts have saved us more times than I can count. Whether it’s a broken redirect, a new crawl error, or a site outage, we’re able to take action quickly — and keep SEO momentum strong.
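Many of those alerts boil down to comparing the latest crawl with the previous one. Here is a minimal sketch of that comparison, assuming each crawl has been exported to a URL-to-status-code mapping (for instance, loaded from a crawler’s CSV export, whose column names vary by tool). The URLs are hypothetical, and how you deliver the resulting messages (email, chat) is up to you.

```python
from typing import Dict, List


def new_issues(previous: Dict[str, int], current: Dict[str, int]) -> List[str]:
    """Compare two crawls (URL -> HTTP status code) and describe what newly broke."""
    alerts = []
    for url, status in sorted(current.items()):
        before = previous.get(url)
        # Alert only on URLs that error now but were fine (or absent) last time.
        if status >= 400 and (before is None or before < 400):
            was = "new URL" if before is None else f"was {before}"
            alerts.append(f"{url} now returns {status} ({was})")
    for url in sorted(previous.keys() - current.keys()):
        alerts.append(f"{url} disappeared from the crawl")
    return alerts


last_week = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-offer": 301,
    "https://www.example.com/blog/": 200,
}
this_week = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-offer": 404,
    "https://www.example.com/blog/": 200,
    "https://www.example.com/blog/draft": 500,
}

for line in new_issues(last_week, this_week):
    print(line)
```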

Technical SEO success isn’t just about knowing what to fix — it’s about knowing when things break and having a system to fix them fast. That’s what this Technical SEO Guide delivers: a proactive approach to monitoring your website’s technical health, supported by the right tools and workflows.

Whether you’re a beginner or managing enterprise-level SEO, these tools and monitoring practices give you control, clarity, and peace of mind — because in SEO, the real wins come from consistency.

Technical SEO Guide to Checklist and Best Practices

By now, we’ve covered everything from crawling and site speed to advanced strategies like JavaScript SEO and hreflang. But even the most well-optimized website needs consistent upkeep to stay at the top. That’s why this part of the Technical SEO Guide focuses on a complete checklist and the best practices I personally follow at Digital Marketing Marvel.

Technical SEO Checklist Essentials You Should Not Miss

This is the same checklist I use during every technical SEO audit. It helps ensure no critical factor is overlooked.

Crawlability and Indexing

- Submit XML sitemap to Google Search Console

- Set up robots.txt correctly without blocking important pages

- Ensure no critical pages are set to noindex

Site Architecture

- Maintain a flat and logical site structure with minimal click depth

- Use internal linking with relevant keywords as anchor text

- Enable breadcrumbs for easier navigation and hierarchy
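Click depth is the shortest-path distance from the homepage in your internal-link graph, so a breadth-first search can measure it. The sketch below assumes you have exported that graph as a page-to-links mapping (the example site is hypothetical) and flags pages deeper than three clicks, a common rule of thumb rather than a Google rule. Pages that appear in the export but are never reached from the homepage are orphans with no internal path to them.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], home: str = "/") -> Dict[str, int]:
    """Breadth-first search from the homepage: depth = minimum clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first time BFS reaches a page is via a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph (page -> pages it links to).
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo-audit/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/old-post/"],
    "/orphan-landing-page/": ["/"],
}

depths = click_depths(site)
too_deep = sorted(p for p, d in depths.items() if d > 3)  # threshold of 3 is an assumption
orphans = sorted(set(site) - set(depths))
print(too_deep)  # ['/blog/old-post/']
print(orphans)   # ['/orphan-landing-page/']
```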

Performance and Mobile Optimization

- Aim to load the main content in 2.5 seconds or less (the “good” Largest Contentful Paint threshold)

- Pass all Core Web Vitals, including Largest Contentful Paint, Interaction to Next Paint (which replaced First Input Delay in March 2024), and Cumulative Layout Shift

- Ensure responsive design for all device sizes

- Use HTTPS for secure browsing
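To check the Core Web Vitals items against numbers, the sketch below rates field measurements against Google’s published thresholds: LCP is good at 2.5 s or less and poor above 4 s, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25, all assessed at the 75th percentile of page loads. The sample measurements are hypothetical, and the nearest-rank percentile is a simplification; PageSpeed Insights and the Chrome UX Report compute these figures from real-user data for you.

```python
import math

# Published boundaries: (upper limit of "good", upper limit of "needs improvement").
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless score
}


def p75(samples):
    """75th percentile by the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric, samples):
    """Return (p75 value, rating) for one metric."""
    good, needs_improvement = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good:
        return value, "good"
    if value <= needs_improvement:
        return value, "needs improvement"
    return value, "poor"


# Hypothetical real-user measurements for one landing page.
lcp_ms = [1800, 2100, 2300, 2600, 3900, 2200, 2000, 4500]
inp_ms = [120, 90, 180, 240]
cls = [0.02, 0.05, 0.3, 0.01]

for name, samples in (("LCP", lcp_ms), ("INP", inp_ms), ("CLS", cls)):
    print(name, *rate(name, samples))
```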

Structured Data

- Implement schema markup for key pages, including FAQ, Product, and Article types

- Test structured data using Google’s Rich Results Test

- Regularly update schema as new content is added
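Schema markup is usually added to a page as a JSON-LD script block. The sketch below builds a basic schema.org Article object with Python’s json module; the headline, author, date, and image URL are placeholders, and you would still validate the output in the Rich Results Test before shipping. Google documents the properties it uses separately for each rich result type, so treat this property set as a starting point rather than a complete list.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, image_url: str) -> str:
    """Return a <script> block containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "image": [image_url],
        "datePublished": date_published,  # ISO 8601 date
        "author": [{"@type": "Person", "name": author}],
    }
    # Escape "</" so text inside the JSON can never close the <script> tag early.
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
snippet = article_jsonld(
    headline="How to Fix Redirect Chains",
    author="Example Author",
    date_published="2024-05-01",
    image_url="https://www.example.com/images/redirects.png",
)
print(snippet)
```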

Redirects and Errors

- Use 301 redirects for permanent changes, not 302s

- Eliminate redirect chains and loops

- Fix all broken links and 404 errors
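Redirect chains and loops can be found by following each redirect until it stops. The sketch below assumes you have a source-to-target redirect map (for example, exported from a crawler or your server configuration); the URLs, including the HTTP to HTTPS to www hops typical of an old migration, are hypothetical. Any chain longer than one hop is a candidate for pointing the original URL straight at its final destination.

```python
from typing import Dict, List, Tuple


def follow_redirects(redirects: Dict[str, str], start: str, max_hops: int = 10) -> Tuple[List[str], bool]:
    """Follow redirects from start; return the URLs visited and whether a loop was hit.

    Stops after max_hops to guard against extremely long chains.
    """
    path = [start]
    seen = {start}
    while path[-1] in redirects and len(path) <= max_hops:
        target = redirects[path[-1]]
        path.append(target)
        if target in seen:
            return path, True  # came back to a URL already visited: a loop
        seen.add(target)
    return path, False


# Hypothetical redirect map (source -> target) left over from an HTTPS migration.
redirect_map = {
    "http://example.com/shop": "https://example.com/shop",
    "https://example.com/shop": "https://www.example.com/shop",
    "https://www.example.com/shop": "https://www.example.com/store/",
    "https://www.example.com/a": "https://www.example.com/b",
    "https://www.example.com/b": "https://www.example.com/a",
}

for source in redirect_map:
    path, loops = follow_redirects(redirect_map, source)
    if loops:
        print("LOOP:", " -> ".join(path))
    elif len(path) > 2:
        print(f"CHAIN ({len(path) - 1} hops): redirect {source} straight to {path[-1]}")
```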

Advanced Optimization

- Use hreflang tags for multi-language websites

- Add canonical tags to fix duplicate content issues

- Ensure JavaScript-rendered content is crawlable and indexable

This checklist is the backbone of every Technical SEO Guide we create for clients, and it’s been tested across different industries and platforms.

Routine Audits and Testing

Technical SEO is not a one-time fix. I recommend:

- Running a full technical audit every 30 to 60 days

- Monitoring crawl stats and index coverage weekly in Google Search Console

- Testing Core Web Vitals regularly, especially after updates to code or design

- Reviewing mobile usability and performance on top landing pages monthly

This kind of maintenance is what keeps a site healthy and ranking consistently over time.

Staying Updated with Google Algorithm Changes

One thing I’ve learned as a digital marketer is that search algorithms are always evolving. Whether it’s a core update or a minor tweak, Google constantly changes the rules.

To stay ahead, I follow official sources like Google Search Central and monitor industry updates. I also test affected pages using Search Console and PageSpeed tools whenever there is an algorithm shift. We update our own Technical SEO Guide every few months to reflect these changes and keep our clients ahead of the curve.

For example, after the 2023 Helpful Content Update, we made key adjustments to schema usage and UX signals, which helped multiple clients recover lost traffic and improve user engagement.

Technical SEO is what keeps your entire SEO machine running. This Technical SEO Guide provides a complete checklist and strategy that’s based on my hands-on work with businesses across different sectors.

If you want sustainable search performance, technical optimization must be part of your ongoing digital strategy. Follow the checklist, test regularly, and adapt as search engines evolve, and you’ll stay far ahead of competitors who ignore the technical foundation.

Technical SEO Guide to Case Studies and Real-World Examples

Theory is useful, but nothing beats the power of real-world proof. Over the past few years at Digital Marketing Marvel, I’ve seen firsthand how the right technical changes can revive stagnant websites, boost rankings, and unlock new growth. In this part of the Technical SEO Guide, I’m sharing some actual examples of success stories, what we fixed, and the results that followed.

Example One: Ranking Jump After Fixing Crawl and Indexing Issues

A legal services website approached us with a major problem. Their blog section had over 80 well-written posts, but only a handful were indexed by Google. After a detailed technical audit, we found that their robots.txt file was blocking the entire blog folder. Their sitemap also didn’t include any blog URLs.

We updated the robots.txt file to allow crawling and rebuilt the XML sitemap to include all relevant blog pages. We submitted the sitemap in Google Search Console and requested indexing.
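Rebuilding a sitemap like that can be scripted. The sketch below produces a minimal sitemap in the sitemaps.org format with Python’s xml.etree.ElementTree; the blog URLs and lastmod dates are hypothetical, and most CMS platforms or SEO plugins can generate this file for you. The lastmod element is optional and should only change when the page content really changes.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return sitemap XML for (loc, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-03-02
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Hypothetical blog posts for a legal services site.
blog_posts = [
    ("https://www.example.com/blog/how-to-file-a-claim/", "2024-03-02"),
    ("https://www.example.com/blog/choosing-a-lawyer/", None),
]
xml_text = build_sitemap(blog_posts)
print(xml_text)
```

You would then save the string as sitemap.xml at the site root, UTF-8 encoded, and submit it in Google Search Console.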

Within four weeks, over 75 percent of their blog posts were indexed and started ranking. Organic traffic grew by 42 percent in just two months. This case reinforced a core principle of this Technical SEO Guide: visibility starts with crawlability.

Example Two: Common Errors Fixed During Routine Audits

A mid-sized ecommerce store had seen a slow decline in traffic despite no major changes to their content. During our technical audit, we discovered:

- Hundreds of 404 errors due to outdated category pages

- Multiple redirect chains from an earlier HTTPS migration

- Canonical tags missing on key product pages

- Slow loading times on mobile due to uncompressed images

We resolved all these issues in phases, starting with redirect cleanup and then moving on to canonicalization and performance optimization.

After implementation, the site experienced a 31 percent increase in organic sessions and a 26 percent boost in average keyword rankings. More importantly, bounce rate dropped and pages per session improved, indicators that users were finally getting a smoother experience.

This case reminded me why regular audits are a must and why this Technical SEO Guide puts such strong emphasis on error handling and crawl efficiency.

Example Three: Measurable Gains from Structured Data and Speed Improvements

An education portal offering online courses was publishing great content but failing to stand out in search results. We implemented structured data, including Article and FAQ schema, across their blog and course pages. At the same time, we optimized page speed using image compression, lazy loading, and an improved hosting configuration.

The result was impressive. Within six weeks, the website started receiving rich snippets in Google for more than 60 pages, and click-through rates increased by over 30 percent. Their mobile Core Web Vitals scores moved from failing to passing across the board. Overall organic traffic rose by 45 percent, and average time on site increased by 18 percent.

All of this came from applying a focused technical strategy built from this very Technical SEO Guide: no new content, no paid ads, just smart optimization.

Each of these examples proves what I always tell clients: technical SEO is not just a background task. It is a growth engine. Whether it’s fixing crawl errors, updating your schema, or speeding up your site, the results are real and measurable.

This Technical SEO Guide is built from these exact experiences. The strategies I’ve shared are not just best practices; they are battle-tested solutions that drive results. If you want to turn your website into a performance machine, start with the technical foundation, and don’t overlook the power of a well-executed audit.

Conclusion & Next Steps in Your Technical SEO Guide

Technical SEO is not about shortcuts or hacks. It’s about making your website easier to crawl, faster to load, more secure to browse, and structured in a way that search engines love.

This Technical SEO Guide reflects everything I practice at Digital Marketing Marvel. It’s not just about rankings — it’s about building a website that performs better, earns trust, and scales organically over time.

If you’re serious about growing your digital presence, take technical SEO seriously — and let this guide be your roadmap to getting it right from the start.
